A Better Way to Identify and Respond to Misinformation Campaigns

Framework for responding to misinformation threats

Luke Bacon

A Framework for Assessing and Responding to Misinformation and Disinformation Campaigns in the Digital Age

This is the second blog post in a two-part series. To explore the issues shaping our information ecosystems and challenging democracies worldwide, read part 1 here.

Those providing crucial services and campaigning on a range of important issues encounter misinformation and disinformation all the time, generally as part of their everyday social media and news reading rather than through dedicated monitoring. Typically, a team member comes across problematic content, perhaps on a platform like TikTok, and promptly shares it with the team. Often, the first response that comes to mind is something like ‘We need to debunk this!’ A quick conversation follows in which the team works out what to do.

This is an important moment where research and action potentially meet, but very often things go astray. In these rapid discussions, which could just be a few instant messages back and forth, there is often little information about, or assessment of:

a) the risk posed;

b) the actual current spread of the problematic content (How many people are exposed? Which people specifically? Why them?);

c) the range of possible strategic response actions available, beyond debunking.

Multiple civil society partners have described this scenario to us: it is extremely difficult to know which threats are worth spending limited resources on, and how to handle them without accidentally making things worse. A sense of paralysis can easily set in, and teams can struggle to draw confidently on their experience and creativity.

While there is no shortage of organisations monitoring and tracking misinformation campaigns, Purpose has observed a gap between this important research and how it is used to inform effective action.

To bridge the gap between research and action, we have developed a Response Model to assess and plan the best action against online threats. It brings structure and direction so that teams reach better conversations faster. Currently, Purpose’s Response Model can be used by individuals and teams and consists of a simple framework that provides direction on how to respond to an online threat, such as a disinformation campaign. It takes users all the way from interpreting a threat to confidently taking action, guided by best practices. It is intended to be used collaboratively, giving teams a common language and orientation to assess and act on threats.

Our Online Threats Response Model provides specific strategic direction for different kinds of misinformation threats.

Guiding Action Through Assessment and Strategic Response

The model supports users with two major steps, assessment and response.

First, rather than just saying ‘look at this terrible thing on Twitter’, researchers are guided to assess threats according to stable definitions of potential impact and reach. This side of the Model builds on a Threat Matrix first introduced to us by GQR, which in turn incorporated Ben Nimmo’s Breakout Scale. It is also similar to risk or assessment frameworks that you and your team might be familiar with.

GQR’s Threat Matrix enables the assessment and prioritisation of online threats by their current reach and potential impact.

Threat assessment can be done by researchers alone, but we’ve found some of the most useful assessments happen when a range of stakeholders are involved and well briefed. This is because ‘potential risk’ means different things to those facing the problem from different standpoints. By structuring this part of the process to include a briefing and different perspectives, we’ve facilitated powerful moments where incorrect assumptions were surfaced and could be corrected.
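The assessment step described above can be sketched in code. This is a minimal illustration, not the Threat Matrix itself: the three-point `Level` scale, the `assess` function, the use of a median to combine ratings, and the rule for flagging disagreement are all assumptions made for this example. The source only states that threats are placed by current reach and potential impact, and that stakeholders may rate potential risk differently.

```python
from dataclasses import dataclass
from enum import IntEnum
from statistics import median_low


class Level(IntEnum):
    # Assumed three-point scale; the actual matrix levels are not given in this post.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class Assessment:
    reach: Level      # current reach of the problematic content
    impact: Level     # potential impact, combined across stakeholders
    contested: bool   # True when stakeholder ratings diverge widely


def assess(reach: Level, impact_ratings: dict[str, Level]) -> Assessment:
    """Place a threat in a (reach, impact) cell from several stakeholder ratings."""
    if not impact_ratings:
        raise ValueError("at least one stakeholder rating is required")
    ratings = sorted(impact_ratings.values())
    # Hypothetical combination rule: take the lower median of the ratings.
    impact = Level(median_low(ratings))
    # Hypothetical disagreement rule: flag a gap of two or more levels.
    contested = ratings[-1] - ratings[0] >= 2
    return Assessment(reach, impact, contested)


result = assess(
    Level.LOW,
    {"researcher": Level.LOW, "campaigner": Level.HIGH, "service_lead": Level.MEDIUM},
)
print(result.impact.name, result.contested)
```

A `contested` result is a prompt for the kind of briefed discussion described above, where differing assumptions can be surfaced, rather than a verdict in itself.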

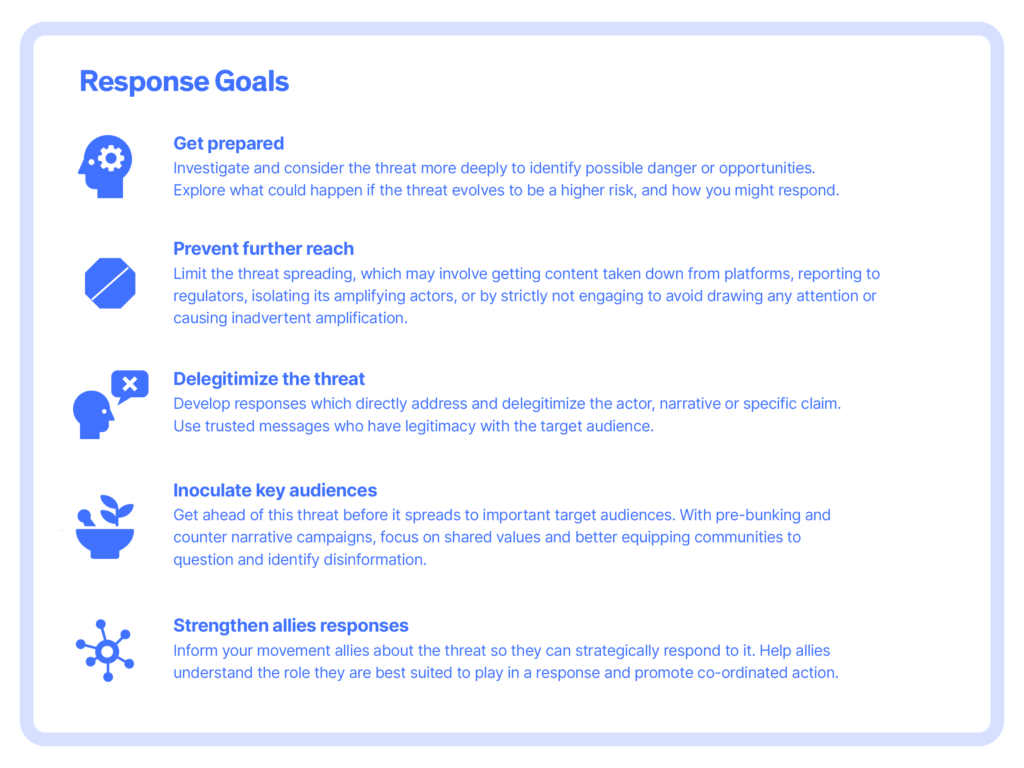

Secondly, once a threat is assessed, the Response Model provides direction about the most appropriate strategy to counter the specific threat at hand. There are two key aims:

a) To help teams avoid accidentally amplifying low-rated or low-reach threats; and,

b) To introduce a broader range of research-backed strategies that get teams thinking and drawing on their experience, creativity, and connections.

An example of this in action is when a team of climate campaigners pivoted from a blunt fact-checking approach (that risked drawing attention to the problematic content) to an ‘inoculation’ approach to build a particular audience’s capacity to recognise and navigate misinformation campaigns. Using ‘trusted messengers’ and key influencers, they were able to reach the target audience that mattered. This strengthened connections rather than alienating audiences.

In practice, the model ranks several Response Goals in each cell on the Threat Matrix that teams could pursue. The priority rating for each goal gives an indication of its appropriateness in addressing this particular type of threat. These are intended as a starting point to help teams design strategic interventions. They are based on a combination of academic research, best practice, and our campaigning experience.
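One way to picture ranked Response Goals per cell is as a lookup table keyed by reach and impact. The sketch below is illustrative only: the cell labels, the goal names, and the priority numbers are placeholders invented for this example, loosely echoing strategies mentioned in this post (avoiding amplification, inoculation, trusted messengers). They are not the rankings in Purpose’s actual model.

```python
from typing import NamedTuple


class RankedGoal(NamedTuple):
    priority: int  # 1 = most appropriate for this cell (assumed convention)
    goal: str


# Keys are (current reach, potential impact). All entries are hypothetical
# placeholders, not the goals or rankings from the real Response Model.
RESPONSE_GOALS: dict[tuple[str, str], list[RankedGoal]] = {
    ("low", "low"): [RankedGoal(1, "monitor without engaging")],
    ("low", "high"): [
        RankedGoal(2, "monitor without engaging"),
        RankedGoal(1, "inoculate key audiences"),
    ],
    ("high", "high"): [
        RankedGoal(1, "inoculate key audiences"),
        RankedGoal(2, "respond via trusted messengers"),
    ],
}


def goals_for(reach: str, impact: str) -> list[str]:
    """Return the goals for a matrix cell, highest priority first."""
    ranked = RESPONSE_GOALS.get((reach, impact), [])
    # NamedTuples sort by their first field, so this orders by priority.
    return [item.goal for item in sorted(ranked)]


print(goals_for("low", "high"))
```

The table returns goals rather than tactics, which leaves each team free to decide how its own skills and resources can serve the top-ranked goal.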

Customising Tactics Based on Context and Resources

The model provides a clear goal to aim for, and then the specific tactics to reach that goal can be designed based on the context, geography, audiences, and creative deployment needed. This is important because every threat has a unique character and context, and every responder has their unique skills and resources for action. Compared to a more prescriptive approach that directs the use of certain tactics (e.g. ‘report content to the platform’), we have found that by recommending broader strategic goals to aim for, or be wary of, we better support our partners’ creativity and get a more rigorous and action-oriented discussion.

Our accompanying resource provides examples and guidance on pursuing and assessing action towards these goals. With this basic guide in mind, individuals, teams, or coalitions can then discuss how their specific skills and resources might achieve these goals in their context.

The Response Goals provide a pathway toward taking confident action to counter misinformation campaigns

Advantages of using the Response Model to counter misinformation campaigns

By providing a scaffold for assessing and planning counter actions, we’ve found the Response Model helps teams deal with emergent online threats in several ways:

- Reducing ambiguity – through a common language, structure, and orientation to discuss online threats and how to act on them

- Providing a starting point for interventions – through prioritising response goals backed by research to reduce guesswork in responding to online threats

- Countering the instinct to correct – directly responding to or correcting misinformation is often ineffective and can be detrimental

- Bridging research, campaigns, and other functions – ensures research more directly informs campaign design and campaign learnings flow back into research design

- Helping allocate resources effectively – allows teams to use the resources and relationships they have at hand to confidently take action

We’ve used our Response Model with clients, and to help multiple teams and partners plan coordinated rapid responses.

We’re continuing to deploy and iterate on the Response Model, alongside other tools and infrastructure, to help those fighting online threats on behalf of progressive causes.

To learn more about how our Response Model works, download the campaigner’s guide to combating misinformation or reach out to us here.