Disinformation Campaigns are Evolving: What You Need to Know

New strategies needed against evolving disinformation

Justine Szalay, Luke Bacon, Andrea De Almeida

Evolving Deception: Unveiling Insights into the Dynamics of Disinformation Campaigns

It is no big revelation that the online world now includes a mass of inaccurate, decontextualized, sensationalist, and hostile content. The widespread circulation of this material makes it harder for people to effectively participate in civic life and for us to make collective progress on many urgent crises. This challenge is a symptom of a number of problems in our information ecosystems, including:

- The decline of local journalistic reporting, which disempowers communities and feeds conspiracy thinking;

- The ongoing impact of disinformation campaigns, including their spread and combination (e.g. the blending of climate disinformation and vaccine conspiracy in the baseless ‘Climate Lockdown’ alarm);

- An onslaught of misdirection in advertising, resulting in an abundance of greenwashing and unfounded claims (e.g. the $1 billion spent by oil and gas majors in the three years following the Paris Agreement); and

- Social media platforms that amplify and incentivise outrage, railroading people into more extreme content.

These factors are shaping our information ecosystems and challenging democracies worldwide. We need to both build our capacities to navigate and counter the impact of disinformation campaigns within this context, and also work to fix these problems and change the situation over time. In our work, we have discovered two key insights that support this goal.

Insight 1: We have a new type of ‘bad but sophisticated actor’

By bad actors, we’re not talking about reality TV stars. We are talking about people and organisations that are seeding and spreading misinformation to deceive people and further their own interests.

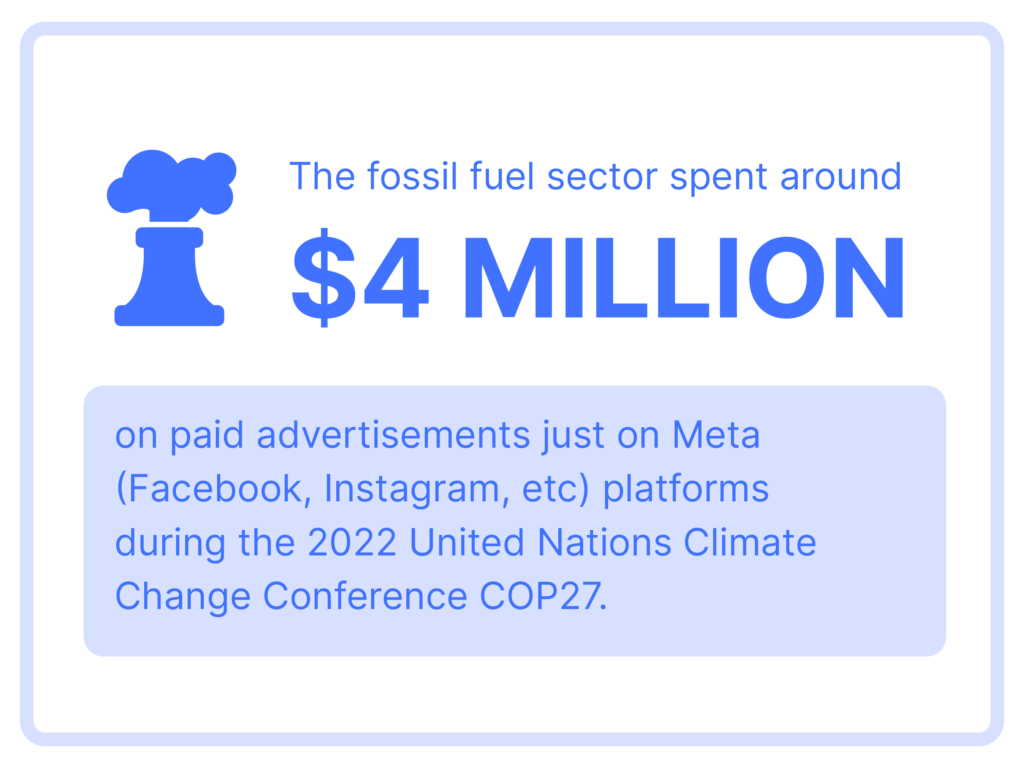

A recent report by the Climate Action Against Disinformation Coalition (CAAD), of which Purpose is a member, revealed the problem’s extent in the context of the climate crisis. It showed that entities linked to the fossil fuel sector spent around $4 million on paid advertisements on Facebook and Instagram (Meta platforms) alone in the weeks leading up to the United Nations Climate Change Conference COP27 in November 2022, a global gathering to progress state commitments to reducing emissions and other climate action.

Disinformation campaigns can take many forms. Alongside the familiar tactic of climate denial, the COP27 research highlighted a number of sophisticated online approaches:

- Greenwashing: misleading people by overstating the environmental action and credentials of some of the world’s worst polluters through advertising.

- Manipulating search results: the climate denial hashtag #climatescam was significantly boosted by an automated account and became a top search result on Twitter for people searching ‘climate’, despite being used by fewer, and less followed, accounts than other relevant hashtags.

- ‘Delayism’: arguments that appear to accept that climate change is happening, but argue for reducing and deferring any action to mitigate it, by shifting responsibility, pushing inadequate solutions, and fear-mongering about the risks of emissions reduction programs.

The confusion generated by fossil fuel interests and their allies works to delay and prevent efforts to mitigate the worst impacts of the climate crisis they created. They are just one variety of the bad actors we face: actors who are willing to intentionally deceive and to exploit vulnerabilities in our information ecosystems to protect their interests, at any cost.

Insight 2: Effectively countering disinformation requires us to bridge the gap between research and action

A gap exists between the groups who are monitoring and tracking (researching) misinformation and the many community stakeholders and institutions who are well-positioned to commit resources and take counteraction. This is creating several problems.

Firstly, researchers who are on the pulse of unfolding threats are often left to take action on their own. This becomes especially problematic if they lack the strategic communication skills necessary to combat disinformation effectively or if they aren’t known and trusted by relevant audiences. This is crucial—we know that to effectively cut through misinformation, responses must come from trusted voices who are connected and accountable to targeted communities. Otherwise, they risk making things worse.

Secondly, the key stakeholders who are trusted by targeted communities often go uninformed about evolving disinformation threats, leading to a failure to take action. Significant threats can fly under the radar and take hold. In other instances, key stakeholders are aware of disinformation campaigns but do not consider them thoroughly, again leading to reactions that can easily backfire and amplify the problem.

Purpose has observed great confusion about how best to counter emergent disinformation. Many organisations default to debunking and fact-checking, or jump into battle in the comments section. These responses only have merit in specific circumstances and frequently fail to engage the audiences that matter, because those audiences don’t trust the fact-checkers.

We also see an overall disconnect between short-term efforts to combat urgent threats, and the longer-term work needed to improve the overall health of information ecosystems, such as policy, cultural, and technological change. As a result, communities continue to be inundated with unhelpful and hostile content that undermines their interests. Toxic patterns repeat, driven by vested interests and opportunistic actors with little to slow them down.

These are the larger trends we see, but the gap between research and action also shows up in the everyday work of the wide range of stakeholders facing these challenges. This brings us to an important practical question: how can disinformation research be used to effectively combat these threats?

This is the question we have been increasingly exploring over the last 18 months, working on our understanding and developing tools to connect research with action within the everyday work of campaigners, advocates, researchers, policymakers, and others who face these challenges.

In our next post, we share what we’ve found to be a powerful tool for teams fighting for progressive causes on a range of issues, including climate justice. Read it now.

Over the last 15 years Purpose has been at the forefront of creating communication strategies and campaigns to tackle some of the world’s largest problems in the areas of climate and environment, democracy and human rights, equity, public health, childhood, and youth.

Across all these issue areas, we have witnessed the rapid surge and increasingly sophisticated online use of misinformation and disinformation to stall, slow and combat the efforts of those who are fighting the good fight.

In response, we have developed a suite of practices both to monitor and assess these threats and to support partners in taking confident strategic action.

We have developed a unique “Response Model” to assess, prioritise and, most importantly, respond to misinformation threats. To learn more, contact us.